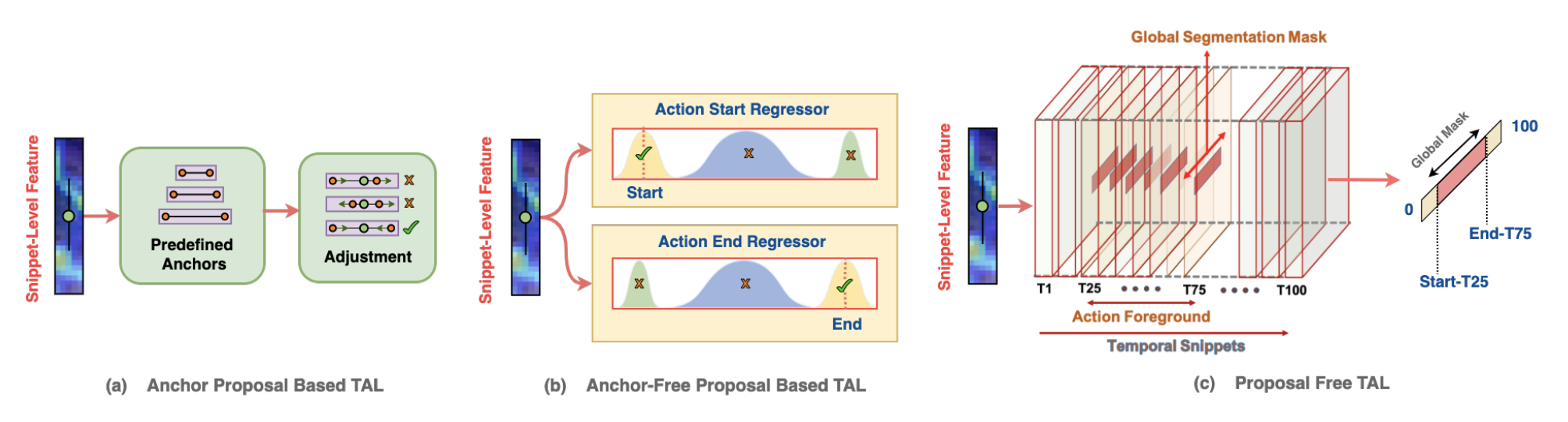

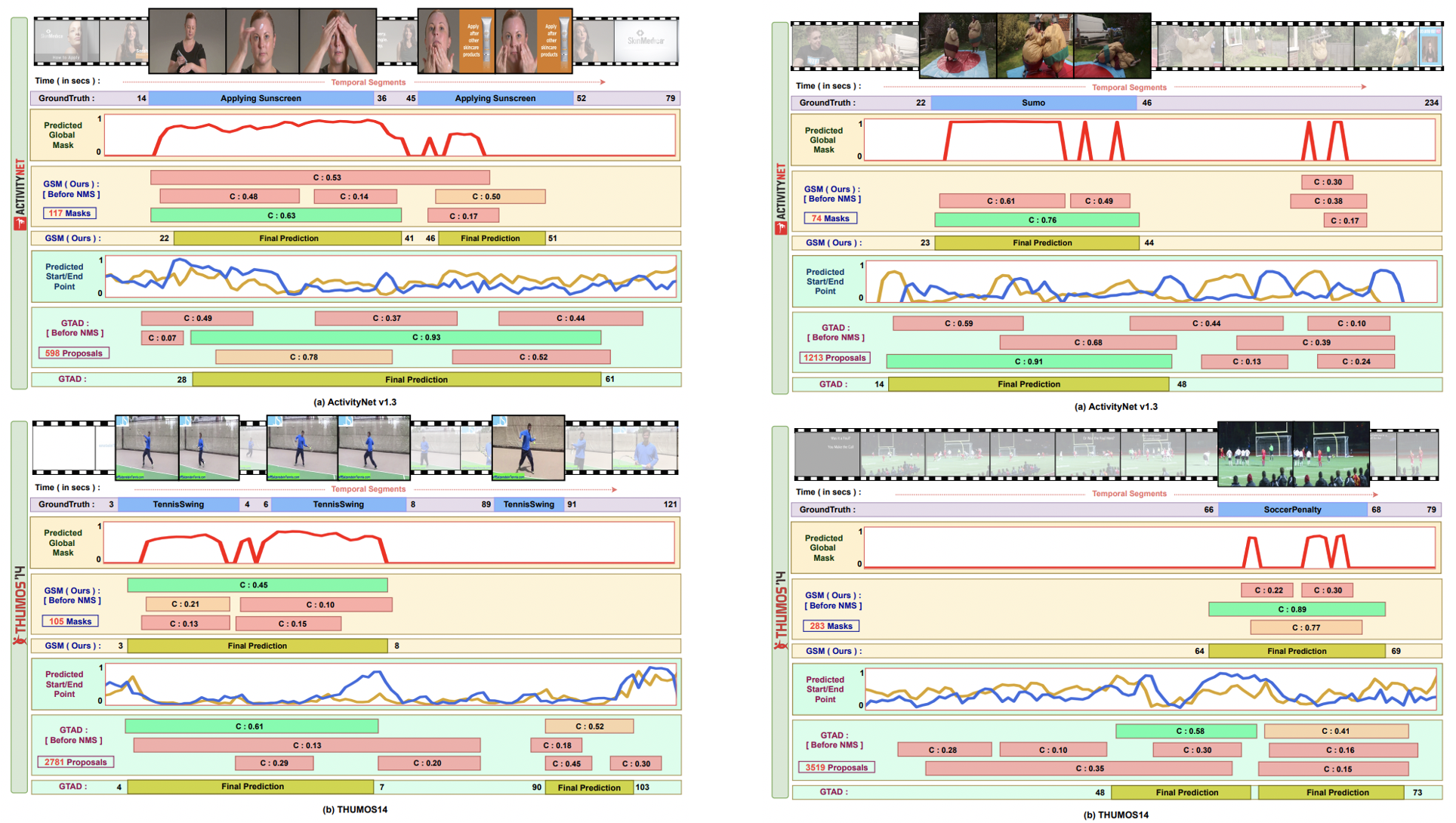

Anchor-Based Approaches

Motivated by anchor-based object detection (e.g., Faster R-CNN). Such methods produce a large number (~8K) of action proposals (i.e., candidate temporal boundaries) for a given video. The proposals are then adjusted and scored to predict which of them are foreground (action) segments. These foreground proposals are then used for action classification.
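As a rough illustration of the proposal step, the sketch below places multi-scale temporal anchors at every position of a video and marks an anchor as foreground when its temporal IoU with a ground-truth action segment is high enough, which is how anchors are commonly labeled when training such a model. The function names, the choice of scales, and the 0.5 IoU threshold are assumptions made for this example, not details taken from any specific method.

```python
from typing import List, Tuple

Segment = Tuple[float, float]  # (start, end) in temporal units, e.g. snippets


def generate_anchors(num_steps: int, scales: List[int]) -> List[Segment]:
    """Place one anchor per scale, centered at every temporal position."""
    anchors = []
    for t in range(num_steps):
        center = t + 0.5
        for s in scales:
            # Clip anchors to the video extent [0, num_steps].
            start = max(0.0, center - s / 2)
            end = min(float(num_steps), center + s / 2)
            anchors.append((start, end))
    return anchors


def temporal_iou(a: Segment, b: Segment) -> float:
    """Intersection over union of two 1-D segments."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def label_foreground(
    anchors: List[Segment],
    gt_segments: List[Segment],
    iou_threshold: float = 0.5,  # assumed value, for illustration only
) -> List[bool]:
    """Mark an anchor as foreground if it overlaps any ground-truth action."""
    return [
        any(temporal_iou(a, g) >= iou_threshold for g in gt_segments)
        for a in anchors
    ]


if __name__ == "__main__":
    # Hypothetical setup: 1000 temporal positions x 8 scales = 8000 anchors.
    anchors = generate_anchors(1000, [1, 2, 4, 8, 16, 32, 64, 128])
    labels = label_foreground(anchors, gt_segments=[(100.0, 160.0)])
    print(len(anchors), sum(labels))
```

In a real model the start and end of each anchor would additionally be regressed toward the true action boundaries, and the foreground score would be predicted by a network rather than computed from ground truth.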